Hi,

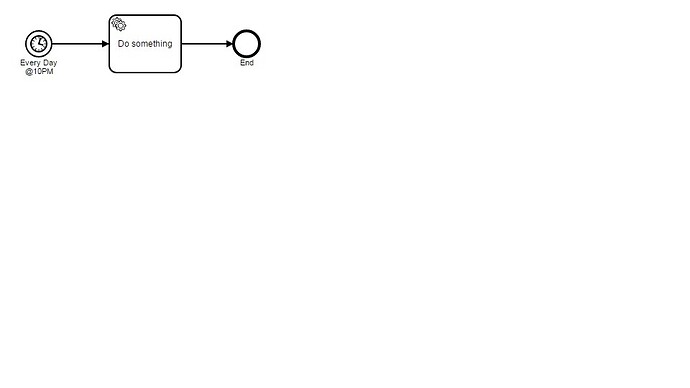

I want to start a process instance hourly/daily on a schedule that can be changed dynamically. I am using a timer start event with Timer Definition Type set to Cycle and Timer Definition set to the hard-coded value 0 0 22 * * ?. Can this value be made dynamic?

Currently it’s a static value and the process runs at 10pm every day, but we want to make that value dynamic so we can update it whenever we want to change the run time to 8pm or 9pm. What is the best way to achieve this?

Thanks,

Srinivas N

I ran into the same issue with Camunda v7.5. A dynamic cycle time wasn’t available for a ‘start-timer-event’ (specifically “start”).

I ran into a few other issues with using a start-event-timer. And, these primarily had to do with development and testing. I couldn’t figure out a method to dynamically (i.e. via API and/or REST call) disable the thing - sort of drove the QA team into a quiet place…

I ended up using quartz - and… not being a huge fan of quartz, I used the components provided within Apache Camel to help drive/configure duration. A very slick feature with the Camel quartz component is that it offers nicely verbose logging as the timers start up.

Next step after setting up Camel-quartz was to simply capture the output events, and via Camunda’s Java-API, fire off various process start-events. I set up a Camel route that called on a java-bean. The java-bean then made direct Camunda java-api calls… starting/checking/etc. process models.

I think Hawtio plugs right into Camel, thereby enabling direct control of various services such as quartz. But, that needs to be re-verified.
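For readers who want to see the shape of that setup without pulling in Camel or Quartz, here is a minimal sketch using only the JDK scheduler as a stand-in. It is not the Camel-quartz configuration described above. The class name `DailyTrigger` and its methods are hypothetical; the callback is where a bean would call Camunda's Java API (for example `runtimeService.startProcessInstanceByKey(...)` with your own process key). It uses local time only and ignores time zones and DST shifts.

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.LocalTime;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

/** Hypothetical helper: runs an action once a day at a time that can be changed at runtime. */
class DailyTrigger {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();
    private final Runnable action;      // e.g. a bean method that starts a process instance
    private LocalTime runAt;
    private ScheduledFuture<?> pending; // the next planned run, if any

    DailyTrigger(LocalTime runAt, Runnable action) {
        this.runAt = runAt;
        this.action = action;
    }

    /** Time from 'now' to the next occurrence of 'runAt': today if still ahead, else tomorrow. */
    static Duration delayUntilNext(LocalDateTime now, LocalTime runAt) {
        LocalDateTime next = now.toLocalDate().atTime(runAt);
        if (!next.isAfter(now)) {
            next = next.plusDays(1);
        }
        return Duration.between(now, next);
    }

    synchronized void start() {
        scheduleNext();
    }

    /** Change the run time, e.g. from 22:00 to 20:00, without redeploying anything. */
    synchronized void reschedule(LocalTime newRunAt) {
        runAt = newRunAt;
        if (pending != null) {
            pending.cancel(false);
        }
        scheduleNext();
    }

    /** Disable the trigger entirely (handy for QA environments). */
    synchronized void stop() {
        if (pending != null) {
            pending.cancel(false);
        }
        scheduler.shutdownNow();
    }

    private void scheduleNext() {
        // +1 ms so that millisecond truncation can never make the task fire early.
        long millis = delayUntilNext(LocalDateTime.now(), runAt).toMillis() + 1;
        pending = scheduler.schedule(this::fire, millis, TimeUnit.MILLISECONDS);
    }

    private void fire() {
        synchronized (this) {
            if (scheduler.isShutdown()) {
                return;
            }
            scheduleNext(); // plan the next run first, so reschedule() always sees it
        }
        action.run();
    }
}
```

Usage would look like `new DailyTrigger(LocalTime.of(22, 0), startNightlyProcess).start()`, followed later by `reschedule(LocalTime.of(20, 0))` from whatever admin endpoint you expose. Note that rescheduling to a time later the same day triggers another run that day.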

I’m going out on a limb here, but if my awful memory serves, wouldn’t you simply be able to fire this up and have it wait for the expected period of time and then execute? If it stays in that wait loop, couldn’t you simply send it a message to an intermediate catch event with a payload that changed the value of the timer?

I would swear this timer can be reset and I’m sorry I don’t remember where (or why) I think this, but I had a similar need to make dynamic adjustments to a timer.

In your case your process is simply waiting for a specific condition to be true (i.e. is it time to execute). So if you change that time, then next time it evaluates the current state, it would use the new value.

Again, I apologize for not being more specific, but I jump in and out of BPMN programming infrequently and I am more involved with the system “plumbing” and performance of Camunda.

Good luck.

Michael

The problem is that we’re trying to make an event service out of a process instance. Though the process model depends on time, interval, and event services in support of BPMN event features (paradigms?), we shouldn’t ask a BPMN model instance to then behave like one.

This problem becomes highlighted when, what is normally a system function such as an event-service, is embedded within a process-type (defined in BPMN) and finds itself working in concert, side-by-side, with several other unique instances of “self” (BPMN-type).

I’ve referred to this problem as the anti-pattern: service-oriented Functions within a Process.

Specifically, driving process with system functions while embedding these features within the process itself, leads to problems in segmentation, agility, operations, and maintenance.

I realized my post wasn’t relevant here, so I removed it.

I thought I saw your reply notice. And when I went back to read the discussion… it was gone.

I’ve done the same thing.

About timer events though -

I’m working on two articles, timer-events (in general) being the topic of one. I have not-so-good… firsthand experience with the problem space. Meaning refactoring a process after seeing the general fallout, downstream results.

Ideally I’d prefer to simply add some additional functionality to the BPMN “start timer event” and “catch message event” :

- start timer: The BPM implementation offers a subscription to a more traditional quartz (or other) service. Key value being we then have a centralized time-service demonstrated via something like a HawtIO service console.

- catch message event: BPM implementation includes a persistent message subscription. Optionally, for example, subscribe to CDI events. And going further past CDI (paradigm) by extending this concept into something like an rx pattern whereby we’re paying particularly close attention to system resources while spreading the work in a more parallel way across computing resources.

The overall goal is to free up Camunda’s BPM-engine to better focus on core BPM function. These edge-case, advanced features come into sharp focus when scaling up to truly massive levels of throughput.

BPMN and CMMN modeling provide an ideal visualization of the solution space. However, we tend to run into the “fix everything with a hammer” trap whereby, even though a BPMN model provides the superior architectural view, it is not the ideal solution given anticipated capacity plans. A few ‘tweaks’ to notation implementation however turn this all around so that we’re back in the process-aware solution envelope.

The basic timer functionality in the conceptual sense seems to be okay to me. I plan to use them in a more advanced, “decoupled” model that I’m putting together wherein all processes are called via the REST API, so it doesn’t matter where the process is located and we’re not dependent upon the Call Activity to initiate a child process. The parent process would start the child asynchronously (I do hope my understanding of that is correct) and then set a catch event to wait for a pre-defined amount of time for a message from the child indicating it has completed or something.

This gets complicated when you want parallel execution of multiple child processes with different handlers on each. Moreover, this fully decoupled model requires rigorous attention to how you pass, store, and evaluate process variables, as they cannot be shared as would be the case with a Call Activity. (There’s an opportunity for a new extension: a Call Activity to a different Camunda instance that would function just like a “local” Call Activity.)
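To make the "start the child, then wait a bounded time for its completion message" idea concrete, here is a rough plain-Java analogy. It is only a sketch of the pattern, not Camunda code: `CompletionRegistry`, `expect`, `complete`, and `await` are hypothetical names, and the correlation id plays the role a business key would play when correlating a message to a waiting catch event in the engine.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical stand-in for "parent waits for a message from a decoupled child". */
class CompletionRegistry {
    private final Map<String, CompletableFuture<String>> waiting = new ConcurrentHashMap<>();

    /** Parent side: register interest BEFORE starting the child, so no message is missed. */
    CompletableFuture<String> expect(String correlationId) {
        return waiting.computeIfAbsent(correlationId, id -> new CompletableFuture<>());
    }

    /** Child side: report completion. Returns false if nobody is (still) waiting. */
    boolean complete(String correlationId, String result) {
        CompletableFuture<String> future = waiting.get(correlationId);
        return future != null && future.complete(result);
    }

    /** Parent side: wait up to the timeout; an empty Optional means the child was too slow. */
    Optional<String> await(String correlationId, long timeout, TimeUnit unit) {
        CompletableFuture<String> future = expect(correlationId);
        try {
            return Optional.ofNullable(future.get(timeout, unit));
        } catch (TimeoutException e) {
            return Optional.empty();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return Optional.empty();
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            // Drop the subscription either way; a late message is then rejected.
            waiting.remove(correlationId, future);
        }
    }
}
```

With several children running in parallel, each one gets its own correlation id and the parent awaits them separately, which is where the different-handler-per-child bookkeeping mentioned above starts to add up. Process variables are not shared here either: whatever the parent needs has to travel in the completion message.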

As to the system resource issue, I guess it would depend upon the architecture and structure of your processes. The initial start (acceptance of request by the REST API) seems very lightweight and Camunda seems to have no problem accepting very high volumes of messages. The problem is when the process starts executing. Then it depends upon a lot of factors, which we’ve discussed at length elsewhere.

The only way to truly load balance based upon current system resource usage would be to provide something like a closed feedback loop to the job executors that would tell them to stop picking up new jobs if they were heavily loaded or had reached a certain level of load. If the job executor had some sort of external “control” channel, then you could tell it to throttle back based upon any number of external resource constraints, including the database. For example, if you were choked on the disk I/O of your database, you could tell it to queue more work and execute fewer jobs.

Architectures like this can be complicated to both build and configure because it’s hard to account for all the potential resources being used. With closed loop feedback you can get things like hysteresis and potentially unpredictable performance. That said, you could in theory control the job executor(s) such that you maintained an acceptable level of process throughput that did not result in an avalanche of rollbacks.
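One common way to keep such a feedback loop from flapping is to build the hysteresis in deliberately, with separate "pause" and "resume" thresholds. The sketch below shows only that decision logic. `HysteresisGate` is a hypothetical class, the load value could be any metric you trust (database I/O wait, CPU, queue depth), and nothing here is wired into Camunda's job executor, which as discussed has no such external control channel out of the box.

```java
/** Hypothetical gate that tells a worker whether it may pick up new jobs. */
class HysteresisGate {
    private final double resumeBelow; // start accepting again at or below this load
    private final double pauseAbove;  // stop accepting at or above this load
    private boolean accepting = true;

    HysteresisGate(double resumeBelow, double pauseAbove) {
        if (resumeBelow >= pauseAbove) {
            throw new IllegalArgumentException("resumeBelow must be lower than pauseAbove");
        }
        this.resumeBelow = resumeBelow;
        this.pauseAbove = pauseAbove;
    }

    /**
     * Feed in the latest load measurement and get the current decision.
     * Between the two thresholds the previous decision is kept, which is
     * what stops the gate from toggling on every small fluctuation.
     */
    synchronized boolean update(double load) {
        if (accepting && load >= pauseAbove) {
            accepting = false;
        } else if (!accepting && load <= resumeBelow) {
            accepting = true;
        }
        return accepting;
    }
}
```

For example, with `new HysteresisGate(0.5, 0.8)` a reading of 0.85 pauses job pickup, a following 0.7 keeps it paused, and only a reading of 0.5 or lower resumes it. The wider the gap between the thresholds, the calmer but slower the reaction.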