Hi,

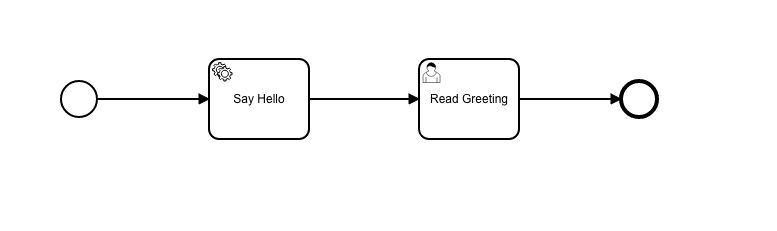

We tried a simple example.

Here, `SayHello` is an external task handled by a worker running in a separate process. For testing purposes, we added a 10-second `sleep`.

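```kotlin
import org.camunda.bpm.client.spring.annotation.ExternalTaskSubscription
import org.camunda.bpm.client.task.ExternalTask
import org.camunda.bpm.client.task.ExternalTaskHandler
import org.camunda.bpm.client.task.ExternalTaskService
import org.springframework.stereotype.Component

@Component
@ExternalTaskSubscription("helloService")
class HelloWorker : ExternalTaskHandler {

    override fun execute(task: ExternalTask, externalTaskService: ExternalTaskService) {
        val name = task.getVariable<String>("name")

        // Simulate a long blocking call (10 seconds) for testing
        Thread.sleep(10_000)

        // Complete the task and pass the greeting back to the process
        externalTaskService.complete(task, mapOf("greeting" to "Hello $name!"))
    }
}
```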

In our case, the process needs to run in parallel. But when I start the above process from the Modeler multiple times (say, clicking the run button 20 times in a second :)), we notice that the external tasks are executed sequentially.

So how can we get the process instances to run in parallel, and these external tasks as well?

Hi @sherry-ummen,

I think the easiest way is to set maxTasks to 1 and start multiple instances of your worker in parallel (either on the same or on different computers).

Let the operating system do the parallelization.

Hope this helps, Ingo


Thanks Ingo,

I am still confused about how maxTasks fixes this, though. Also, we use Kubernetes, so I am not sure whether just starting multiple workers is feasible.

Do you have any example code which I can look into ?

Hello @sherry-ummen,

when running this on k8s, just scale up the number of nodes.

The maxTasks variable determines how many tasks a worker will lock at once (and then finish them in sequence).

In the scenario Ingo described, every worker fetches one task at a time, finishes it, and then fetches the next. So 10 workers can finish 10 tasks in parallel without locking any additional tasks.
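To make the effect of `maxTasks` concrete, here is a small, self-contained Kotlin model of fetch-and-lock. It is a simplification, not the Camunda client: the function `makespan` and its parameters are hypothetical, and it assumes every task takes the same fixed time and that a worker runs the tasks it has locked one after another, as described above.

```kotlin
import java.util.PriorityQueue

/**
 * Hypothetical model of fetch-and-lock: whenever a worker is idle, it locks up to
 * [maxTasks] of the remaining tasks and processes them one after another.
 * Returns the wall-clock time (in seconds) until all [taskCount] tasks are done.
 */
fun makespan(taskCount: Int, workers: Int, maxTasks: Int, taskSeconds: Int): Int {
    require(workers > 0 && maxTasks > 0 && taskSeconds >= 0 && taskCount >= 0)
    // Times at which each worker becomes free to fetch again; all start idle.
    val freeAt = PriorityQueue<Int>()
    repeat(workers) { freeAt.add(0) }
    var remaining = taskCount
    var finish = 0
    while (remaining > 0) {
        val start = freeAt.poll()               // the earliest idle worker fetches next
        val locked = minOf(maxTasks, remaining) // tasks locked in this single fetch
        remaining -= locked
        val done = start + locked * taskSeconds // locked tasks run sequentially
        finish = maxOf(finish, done)
        freeAt.add(done)
    }
    return finish
}

fun main() {
    // 20 tasks, each blocking for 10 s like the HelloWorker example
    println(makespan(20, workers = 1, maxTasks = 10, taskSeconds = 10))  // 200
    println(makespan(20, workers = 10, maxTasks = 10, taskSeconds = 10)) // 100
    println(makespan(20, workers = 10, maxTasks = 1, taskSeconds = 10))  // 20
}
```

In this model, with 20 tasks and 10 workers, `maxTasks = 10` lets two workers lock everything while the other eight sit idle, whereas `maxTasks = 1` spreads the tasks across all ten workers.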

Hope this helps further

Jonathan

Alright. Thank you Jonathan.

Hmm, then it does not serve our purpose. In our case, there will be 100 to 1,000 or even more processes running in a given minute.

It is not feasible for us to keep that many nodes running.
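A rough back-of-the-envelope estimate can help size this. Assuming (hypothetically) that every process runs exactly one task that blocks for the 10 seconds of the test `sleep`, Little's law (average tasks in flight = arrival rate × time per task) gives the number of tasks that must be in progress at the same time, however that concurrency is provided. The function name below is illustrative only.

```kotlin
import kotlin.math.ceil

// Little's law: average number of tasks in progress = arrival rate × time per task.
// Rounded up, because a fractional task still needs a free slot.
fun requiredConcurrency(tasksPerMinute: Int, taskSeconds: Double): Int =
    ceil(tasksPerMinute / 60.0 * taskSeconds).toInt()

fun main() {
    println(requiredConcurrency(100, 10.0))  // 17
    println(requiredConcurrency(1000, 10.0)) // 167
}
```

Under these assumptions, 1,000 processes per minute means roughly 167 tasks running concurrently on average; a shorter blocking call shrinks that number proportionally.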

So now I am a bit more confused on how we should model it.

Just to elaborate a bit:

We have workflows that can consist of a combination of different states. They are triggered when an external event arrives from Kafka. All of these workflows may call the same external service at the same time.

Now I have the feeling that the way we use these workers does not suit our purpose, so some tips would be very helpful.

The documentation is massive and sometimes confusing, since there are many ways to do the same thing, as is common with a mature product.

Hello @sherry-ummen,

basically, there are 2 ways of doing this:

The one you described first is one of them. The great advantage of external workers is that they are basically microservices that can be scaled horizontally.

The other way is using a JavaDelegate. Here, the advantage is that you do not need to connect from outside: the delegate lives inside your application as a bean. From the delegate, you can do whatever it takes to implement your business logic.

In my opinion, you can stay with the microservices approach and first run a load simulation (with mocked events) to see how well the external workers perform (from what I have seen, this is a very performant approach).

Further information can be found here:

https://camunda.com/best-practices/performance-tuning-camunda/

Hope this helps

Jonathan

Thank you. I think I will just go with a JavaDelegate for now.

However, I bumped into another issue. As you can see in my example, I added a sleep just to check how Camunda behaves with blocking calls. When I run the process from the Modeler, I see this error in its logs:

Starting instance failed [ start-instance-error ]

So why is it giving this error? Will this be an issue when there is a long blocking call?

Try setting async before (`asyncBefore`) on your service task, so that a job is created in the database and executed in another thread as soon as a job executor thread is free to run it.
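The following plain-Kotlin sketch illustrates why this helps with a blocking call. It is an in-memory analogy, not the Camunda engine: `runInstances` and its parameters are hypothetical, a fixed thread pool stands in for the job executor, and persisting the job in the database is not modeled. The caller only hands each job to the pool and returns, so starting an instance no longer waits for the `sleep`, and several blocking calls run side by side on the pool threads.

```kotlin
import java.util.concurrent.CountDownLatch
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit

/**
 * Hypothetical stand-in for asyncBefore: the caller submits one job per instance
 * to a pool of executor threads instead of running the blocking call itself.
 * Returns (time the caller spent starting all instances, time until all finished) in ms.
 */
fun runInstances(count: Int, executorThreads: Int, taskMillis: Long): Pair<Long, Long> {
    val jobExecutor = Executors.newFixedThreadPool(executorThreads)
    val done = CountDownLatch(count)
    val t0 = System.nanoTime()
    repeat(count) { i ->
        jobExecutor.execute {
            Thread.sleep(taskMillis) // the blocking call from the example
            println("Hello instance $i!")
            done.countDown()
        }
    }
    // The caller has only enqueued jobs; it did not wait for any blocking call.
    val startMillis = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - t0)
    done.await()
    val totalMillis = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - t0)
    jobExecutor.shutdown()
    return startMillis to totalMillis
}

fun main() {
    // 20 starts, as in the "click run 20 times" test, with 10 executor threads and a
    // 1-second blocking call: roughly 2 s in total instead of 20 s sequentially.
    val (startMillis, totalMillis) = runInstances(count = 20, executorThreads = 10, taskMillis = 1_000)
    println("caller returned after $startMillis ms, all done after $totalMillis ms")
}
```

In this sketch, the caller's time stays close to zero, while the total time is bounded by the number of pool threads available to run the blocking calls.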