Hello Folks,

I have been reviewing the docs and forums but haven't yet found a clear answer to my question about the effect of the “jobExecutorActivate” setting in a heterogeneous cluster with more than two servers.

My Spring project contains the source code, including delegates, for two tenants. Which beans Spring creates is controlled by a package scan, either:

<context:component-scan base-package="com.pcorbett.app.tenantA" />

or

<context:component-scan base-package="com.pcorbett.app.tenantB" />

This means that a single WAR file is configured through server environment variables to create an instance for either TenantA or TenantB.

My Spring application also creates an embedded process engine with the following configuration for each application type (TenantA and TenantB):

<bean id="processEngineConfiguration" class="org.camunda.bpm.engine.spring.SpringProcessEngineConfiguration">

<property name="processEngineName" value="default" />

<property name="dataSource" ref="camundaDataSource" />

<property name="transactionManager" ref="transactionManager" />

<property name="history" value="audit" />

<property name="databaseSchemaUpdate" value="${camunda_databaseSchemaUpdate}" />

<!--

the jobExecutorActivate must be activated when dealing with wait events, see

https://docs.camunda.org/manual/7.6/user-guide/process-engine/the-job-executor/

-->

<property name="jobExecutorActivate" value="true" />

<!-- get jobs by "ascending due date" so timers are fired earlier -->

<property name="jobExecutorAcquireByPriority" value="false" />

<property name="jobExecutorPreferTimerJobs" value="true" />

<property name="jobExecutorAcquireByDueDate" value="true" />

<!--

Disable batch processing for Oracle 10, 11 and, in this case, 12 -

https://forum.camunda.io/t/fresh-installation-fails-on-startup-oracle-12c/6259/3

-->

<property name="jdbcBatchProcessing" value="false" />

<property name="deploymentResources" value="classpath:${tenant}/*.bpmn" />

<property name="deploymentTenantId" value="${tenant}" />

</bean>

<bean id="processEngine" class="org.camunda.bpm.engine.spring.ProcessEngineFactoryBean">

<property name="processEngineConfiguration" ref="processEngineConfiguration" />

</bean>

This all works as expected: the database is up and running, the deployments are defined for a specific tenant, and most of the time this works well.

In the last few days I have noticed ClassNotFound issues relating to my JavaDelegates, similar to this thread.

The issue is that I have two deployments and they are not identical (heterogeneous):

- Application for TenantA with the delegates & bpmn files for TenantA

- Application for TenantB with the delegates & bpmn files for TenantB

I understand that the Job Executor can run into issues if the Job Executor on the TenantA node picks up a job that should be handled by the TenantB node.

To solve this I plan on using <property name="jobExecutorDeploymentAware" value="true" /> in my engine configuration. However, how does this affect the scalability of my application?
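For reference, the change I have in mind would look roughly like this. It is only a sketch of the configuration above with the new property added; the other properties are left out for brevity and would stay unchanged. The comment reflects my understanding of the documentation, not something I have verified in a cluster.

```xml
<bean id="processEngineConfiguration" class="org.camunda.bpm.engine.spring.SpringProcessEngineConfiguration">
  <!-- all existing properties from the configuration above stay as they are -->
  <property name="jobExecutorActivate" value="true" />

  <!--
    Planned addition: as I understand it, the job executor then only acquires
    jobs that belong to deployments registered with this engine.
  -->
  <property name="jobExecutorDeploymentAware" value="true" />
</bean>
```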

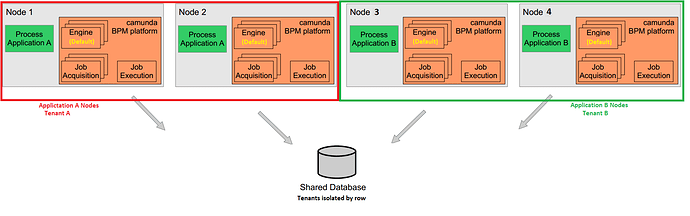

Right now i only have two nodes one of each Application type, what happens when i have two instances of each application like this:

Does the jobExecutorDeploymentAware option bind the job to a specific node, or to the deployment, i.e. all nodes where the deployment is available?

I just want to make sure that if a job is started on Application B Node 3 and this node becomes unavailable for whatever reason, the job will be picked up and handled by Application B Node 4.