I’m using Camunda with Spring Boot in a Kubernetes environment with 3 pods, with PostgreSQL as the database.

I have a BPMN workflow. During its execution, the process engine threw ENGINE-16006 BPMN exceptions. However, the flow seemed to continue and finish without any real impact.

I couldn’t find any related discussion of the ENGINE-16006 BPMN error code.

Is this something I need to worry about, or can I just ignore the error?

ENGINE-16006 BPMN Stack Trace:

```
Task_1q1er1j (transition-notify-listener-take, ScopeExecution[27ef6b53-b9c1-11ea-b4bb-7a312e676758], pa=migrationApplication)
Task_1q1er1j, name=Export Data from Mongo
```

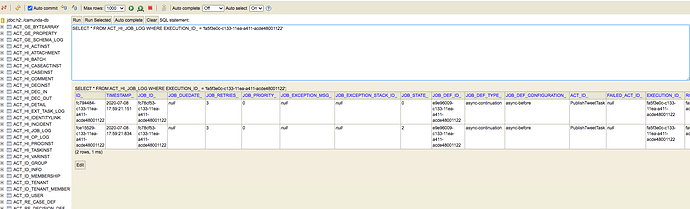

```
o.c.b.e.e.NullValueException: Cannot find execution with id '1331c9d2-b9c4-11ea-b4bb-7a312e676758' referenced from job 'MessageEntity[repeat=null, id=576d7b6d-b9c5-11ea-b4bb-7a312e676758, revision=2, duedate=null, lockOwner=0f48ccab-e461-40b9-ad72-5555de076fee, lockExpirationTime=Mon Jun 29 05:09:56 GMT 2020, executionId=1331c9d2-b9c4-11ea-b4bb-7a312e676758, processInstanceId=12fdc238-b9c4-11ea-b4bb-7a312e676758, isExclusive=true, retries=1, jobHandlerType=async-continuation, jobHandlerConfiguration=transition-notify-listener-take$SequenceFlow_0gps8w9, exceptionByteArray=null, exceptionByteArrayId=null, exceptionMessage=null, deploymentId=7b35d6bf-ab84-11ea-8023-d2037c48cdc9]': execution is null
    at s.r.GeneratedConstructorAccessor249.newInstance(Unknown Source)
    at s.r.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at j.l.r.Constructor.newInstance(Constructor.java:423)
    at o.c.b.e.i.u.EnsureUtil.generateException(EnsureUtil.java:381)
    at o.c.b.e.i.u.EnsureUtil.ensureNotNull(EnsureUtil.java:55)
    at o.c.b.e.i.u.EnsureUtil.ensureNotNull(EnsureUtil.java:50)
    at o.c.b.e.i.p.e.JobEntity.execute(JobEntity.java:120)
    at o.c.b.e.i.c.ExecuteJobsCmd.execute(ExecuteJobsCmd.java:109)
    at o.c.b.e.i.c.ExecuteJobsCmd.execute(ExecuteJobsCmd.java:42)
    at o.c.b.e.i.i.CommandExecutorImpl.execute(CommandExecutorImpl.java:28)
    at o.c.b.e.i.i.CommandContextInterceptor.execute(CommandContextInterceptor.java:107)
    at o.c.b.e.s.SpringTransactionInterceptor$1.doInTransaction(SpringTransactionInterceptor.java:46)
    at o.s.t.s.TransactionTemplate.execute(TransactionTemplate.java:140)
    at o.c.b.e.s.SpringTransactionInterceptor.execute(SpringTransactionInterceptor.java:44)
    at o.c.b.e.i.i.ProcessApplicationContextInterceptor.execute(ProcessApplicationContextInterceptor.java:70)
    at o.c.b.e.i.i.LogInterceptor.execute(LogInterceptor.java:33)
    at o.c.b.e.i.j.ExecuteJobHelper.executeJob(ExecuteJobHelper.java:51)
    at o.c.b.e.i.j.ExecuteJobHelper.executeJob(ExecuteJobHelper.java:44)
    at o.c.b.e.i.j.Execu…
```
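The job description inside the `NullValueException` message carries the ids needed to trace the failure: the job `id`, the `executionId` that could not be found, the `processInstanceId`, and the `lockOwner` of the job executor that had locked the job (useful for telling which of the 3 pods picked it up). Below is a minimal stdlib-only Java sketch for pulling those fields out of the `MessageEntity[...]` text. It is not part of Camunda; the class name `JobDescriptionParser` is hypothetical, and it assumes no value contains a `", "` sequence, which holds for the message quoted above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not a Camunda API: parses the "MessageEntity[key=value, ...]"
// text printed in the NullValueException message into an ordered key/value map.
final class JobDescriptionParser {

    // Assumes values never contain ", " (true for the job description in the log above).
    static Map<String, String> parse(String description) {
        int open = description.indexOf('[');
        int close = description.lastIndexOf(']');
        if (open < 0 || close < open) {
            throw new IllegalArgumentException("Not an entity description: " + description);
        }
        Map<String, String> fields = new LinkedHashMap<>();
        for (String part : description.substring(open + 1, close).split(", ")) {
            int eq = part.indexOf('=');
            if (eq > 0) {
                // Split only on the first '=' so values keep any later characters intact
                fields.put(part.substring(0, eq), part.substring(eq + 1));
            }
        }
        return fields;
    }

    public static void main(String[] args) {
        String job = "MessageEntity[repeat=null, id=576d7b6d-b9c5-11ea-b4bb-7a312e676758, revision=2, "
                + "duedate=null, lockOwner=0f48ccab-e461-40b9-ad72-5555de076fee, "
                + "lockExpirationTime=Mon Jun 29 05:09:56 GMT 2020, "
                + "executionId=1331c9d2-b9c4-11ea-b4bb-7a312e676758, "
                + "processInstanceId=12fdc238-b9c4-11ea-b4bb-7a312e676758, isExclusive=true, retries=1, "
                + "jobHandlerType=async-continuation, "
                + "jobHandlerConfiguration=transition-notify-listener-take$SequenceFlow_0gps8w9, "
                + "exceptionByteArray=null, exceptionByteArrayId=null, exceptionMessage=null, "
                + "deploymentId=7b35d6bf-ab84-11ea-8023-d2037c48cdc9]";

        Map<String, String> fields = parse(job);
        // Print the fields most useful for matching the failing job against other pods' logs
        String[] keys = {"id", "executionId", "processInstanceId", "lockOwner", "retries", "jobHandlerConfiguration"};
        for (String key : keys) {
            System.out.println(key + " = " + fields.get(key));
        }
    }
}
```

Running `main` prints one line per selected field, for example `executionId = 1331c9d2-b9c4-11ea-b4bb-7a312e676758`, which can then be compared with the ids in the engine's runtime and history data and with the logs of the other pods.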